Rate limiting by IP using Cloudflare's rate limiting rules

My blog was showing poor performance, with some pages taking several seconds to load or even failing entirely.

Tailing the Heroku logs showed a barrage of traffic to the /search/ page. That page implements faceted search, so it has an almost unlimited set of possible URL combinations, such as /search/?tag=python&year=2023.

I have this in my robots.txt file precisely to prevent bots from causing problems here:

    User-agent: *
    Disallow: /search/

Evidently something was misbehaving and ignoring my robots.txt!
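For comparison, here is a minimal sketch of the check a well-behaved crawler would run before fetching a URL, using Python's standard `urllib.robotparser`. The `ExampleBot` user agent and the `/about/` path are made up for illustration; only the two robots.txt lines come from my actual file.

```python
from urllib.robotparser import RobotFileParser

# The same two rules as in the robots.txt shown above.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search/
"""


def is_allowed(url, user_agent="ExampleBot"):
    """Return True if robots.txt permits user_agent to fetch url.

    "ExampleBot" is a hypothetical crawler name used for illustration.
    """
    parser = RobotFileParser()
    parser.parse(ROBOTS_TXT.splitlines())
    return parser.can_fetch(user_agent, url)


print(is_allowed("/search/?tag=python&year=2023"))  # False
print(is_allowed("/about/"))  # True ("/about/" is a hypothetical path)
```

Any crawler that ran a check like this would never have requested the faceted search URLs.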

My entire site runs behind Cloudflare with a 200-second cache TTL. This means my backend normally doesn't even notice spikes in traffic, because most requests are served from the Cloudflare cache.

Unfortunately this trick doesn't help for crawlers that are hitting every possible combination of facets on my search page!
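To make the difference concrete, here is a rough simulation of a cache keyed on the full URL with a 200 second TTL. It is only a sketch: it assumes the query string is part of the cache key, and the tag and year values are invented rather than taken from my site.

```python
from itertools import product

CACHE_TTL_SECONDS = 200  # the TTL mentioned above


def backend_hits(requests, ttl=CACHE_TTL_SECONDS):
    """Count requests that miss a simple URL-keyed TTL cache.

    `requests` is an iterable of (timestamp_seconds, url) pairs in time order.
    Every miss stands for one request that reaches the backend.
    """
    expires_at = {}  # url -> time at which the cached copy expires
    misses = 0
    for now, url in requests:
        if expires_at.get(url, float("-inf")) <= now:
            misses += 1
            expires_at[url] = now + ttl
    return misses


# A spike of 1,000 requests for the same page, one every 0.1 seconds.
spike = [(i * 0.1, "/") for i in range(1000)]

# A crawler walking hypothetical facet values: every URL is different.
tags = [f"tag{i}" for i in range(50)]
years = range(2004, 2024)
crawl = [
    (i * 0.1, f"/search/?tag={tag}&year={year}")
    for i, (tag, year) in enumerate(product(tags, years))
]

print(backend_hits(spike))  # 1
print(len(crawl), backend_hits(crawl))  # 1000 1000
```

The spike costs the backend a single request, while the crawl of 1,000 distinct facet combinations costs it 1,000.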

Thankfully, it turns out Cloudflare can also rate limit requests to a path.

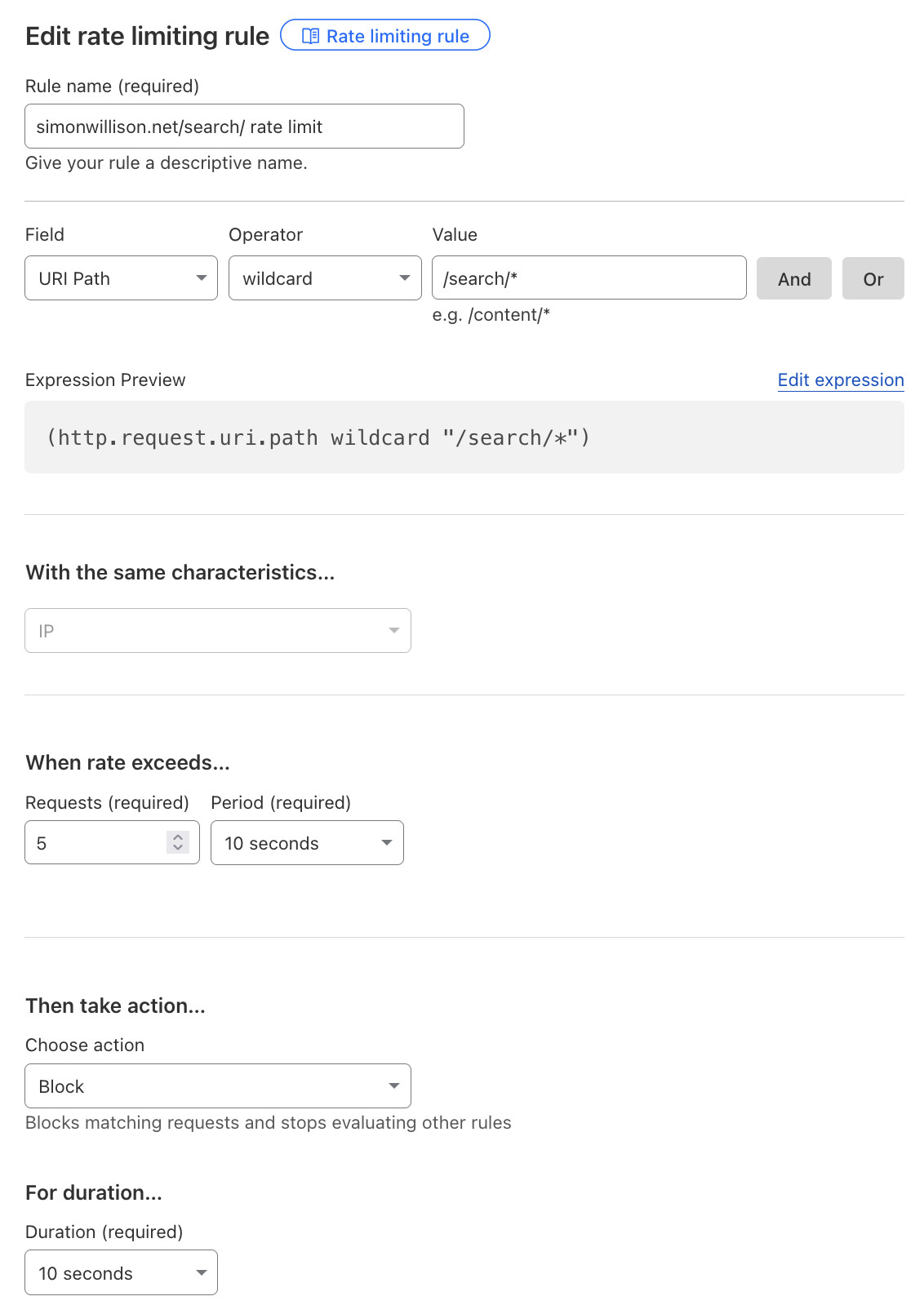

I found this option in the Security -> WAF -> Rate limiting rules area of the admin panel. Here's how I configured my rule:

I matched the URI path against /search/* and had the rule block requests from an IP once that IP exceeded 5 requests in 10 seconds. That appeared to do the job.

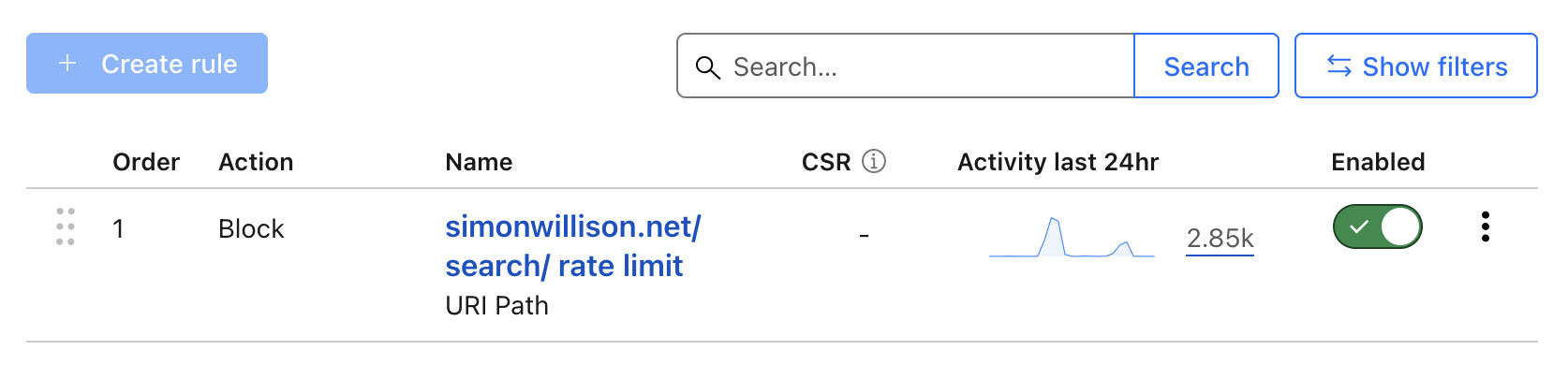
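The logic of that rule can be sketched as a per-IP sliding window counter. This is not Cloudflare's implementation, which I haven't seen; the `PathRateLimiter` class is a hypothetical illustration of "at most 5 requests per 10 seconds per IP on /search/", and it ignores details such as how long a block lasts once triggered.

```python
from collections import defaultdict, deque


class PathRateLimiter:
    """Hypothetical sketch of a per-IP limit applied to one path prefix."""

    def __init__(self, prefix="/search/", max_requests=5, period_seconds=10):
        self.prefix = prefix
        self.max_requests = max_requests
        self.period_seconds = period_seconds
        # ip -> timestamps of recently allowed requests to the prefix
        self._recent = defaultdict(deque)

    def allow(self, ip, path, now):
        """Return True if the request should pass, False if it is blocked."""
        if not path.startswith(self.prefix):
            return True  # the rule only applies to the matched path
        window = self._recent[ip]
        # Forget requests that have fallen out of the counting period.
        while window and now - window[0] >= self.period_seconds:
            window.popleft()
        if len(window) >= self.max_requests:
            return False
        window.append(now)
        return True


limiter = PathRateLimiter()
ip = "203.0.113.7"  # address from the documentation range, for illustration

# One request per second: the sixth and seventh exceed 5 in 10 seconds.
results = [limiter.allow(ip, "/search/?tag=python", now=t) for t in range(7)]
print(results)  # [True, True, True, True, True, False, False]

# Other paths are never limited by this rule.
print(limiter.allow(ip, "/", now=6))  # True
```

A human clicking through a handful of searches stays under the limit, while a crawler hammering facet combinations gets cut off almost immediately.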

I've been running this for a few days now, and the Cloudflare dashboard shows that it's blocked 2,850 requests in the past 24 hours:

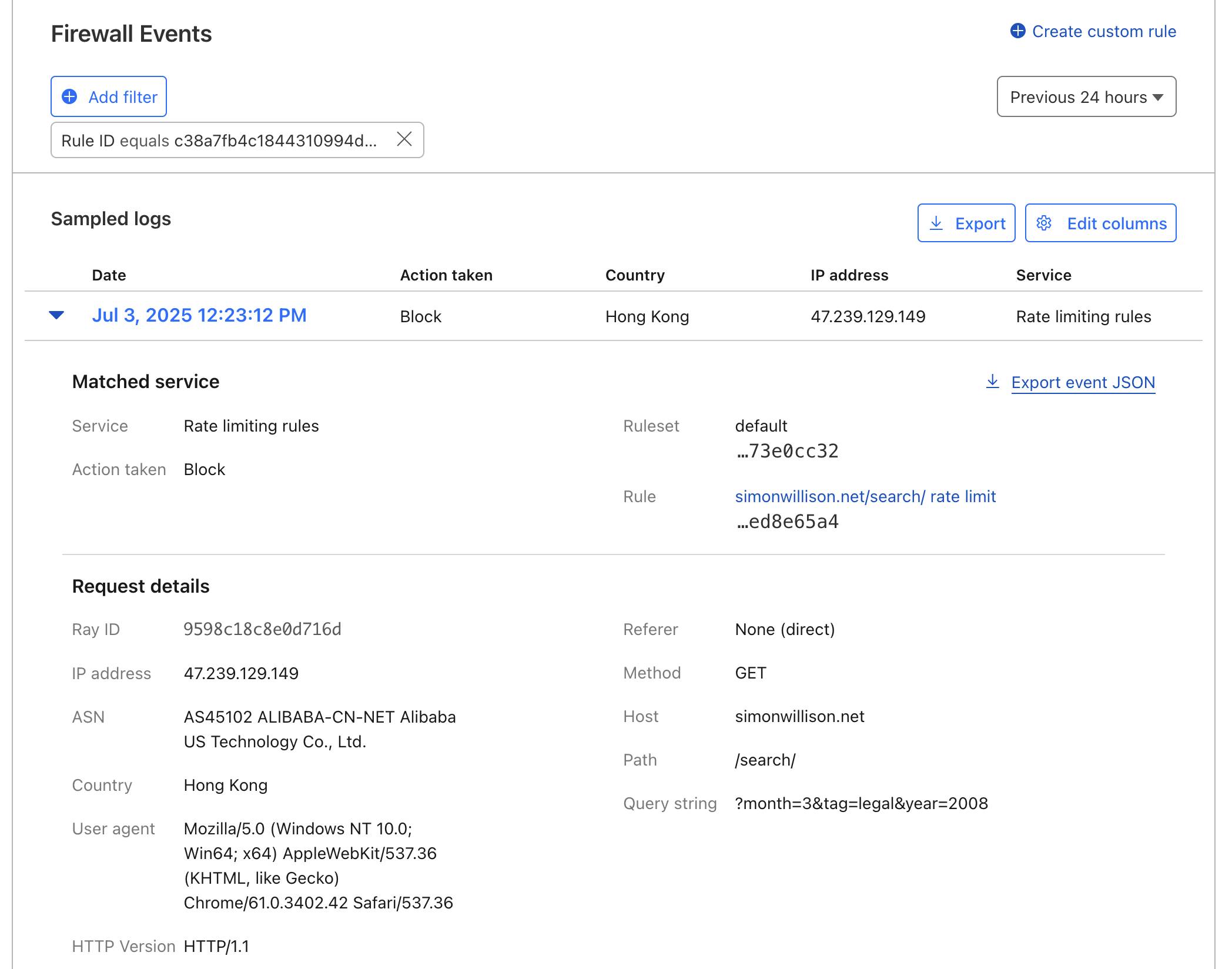

Clicking through shows the Cloudflare WAF logs, which provide detailed information about the blocked requests.

Created 2025-07-03T14:06:22-07:00, updated 2025-07-03T23:02:47-07:00